distributed and social reinforcement learning

A large part of the motivation for distributed decision-making in groups of artificial agents (like teams of robots) is the robustness and adaptivity of such systems. This adaptivity can come from learning algorithms, and in particular reinforcement learning (RL). But when more than one agent is learning good behaviors or policies at the same time, the underlying decision process becomes non-stationary. In addition, the agents may have to rely on limited local observations of the environment state, which may lead to a scarcity of experience and a local, error-prone estimate of the group reward signal. However, under certain conditions agents may use agreement algorithms in combination with an RL algorithm to improve their reward and experience estimates and speed up distributed learning considerably. I have demonstrated this idea with an extension to a direct policy search algorithm for modules in self-reconfiguring modular robots (SRMRs) learning locomotion gaits.

P. Varshavskaya, L. P. Kaelbling, and D. Rus, "Efficient Distributed Reinforcement Learning Through Agreement," 9th International Symposium on Distributed Autonomous Robotic Systems (DARS), Tsukuba, Japan, November 2008.

Agreement between local neighbors may make reinforcement learning easier and faster, but it represents only a first step towards a general understanding of how agents may incorporate other agents' knowledge into their own sequential decision-making. I am currently working on a principled way to incorporate social observation into the RL framework, for what I call social reinforcement learning (SRL).

Related to this initiative is the Social Learning Tournament and the Collective Prediction Project at the CCI, where I am directing the development of AI agents to play on prediction markets.

automating the design of distributed controllers

Designing distributed controllers for self-reconfiguring modular robots has been consistently challenging. I have developed a reinforcement learning approach which can be used both to automate controller design and to adapt robot behavior online.

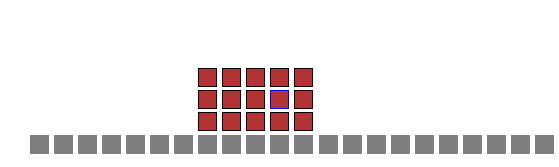

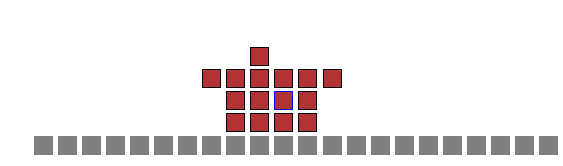

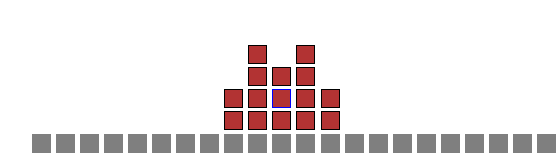

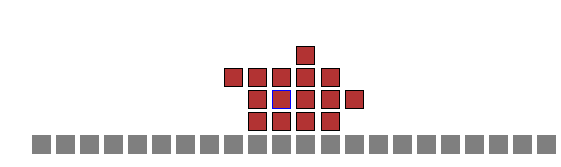

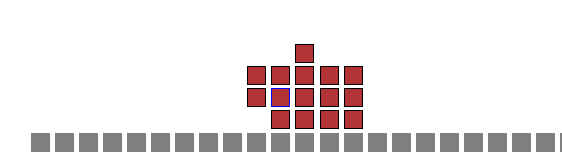

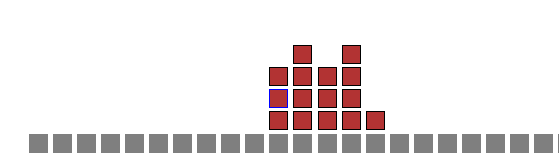

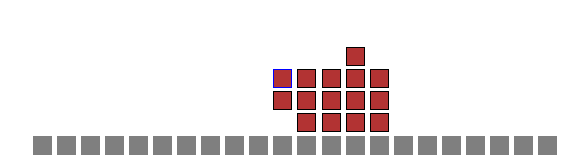

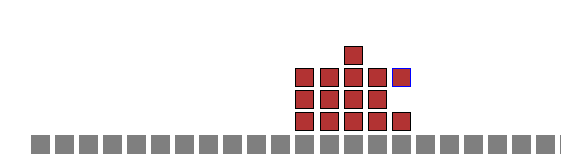

I study reinforcement learning in the domain of self-reconfigurable modular robots: the underlying assumptions, the applicable algorithms, and the issues of partial observability, large search spaces and local optima. I propose and validate experimentally in simulation a number of techniques designed to address these and other scalability issues that arise in applying machine learning to distributed systems such as modular robots. The simulations are based on an abstract kinematic model of lattice-based self-reconfiguring modular robots. Instantiations of such robots in hardware include the Molecule, M-TRAN, and the SuperBot.

A planar 15-module SRMR executing a locomotion policy in an abstract kinematic simulation.

Read more about this project below and on the DRL group webpage.

How structure and information affect learning

In what ways can we make learning faster, more robust and amenable to online application? Giving the learning agents scaffolding in the form of policy representation, structured experience and additional information helps with learning. My position is that, with enough structure, modular robots can run learning algorithms both to automate the generation of distributed controllers and to adapt to a changing environment, delivering on the promise of self-organization with less interference from human designers, programmers and operators.

Agreement algorithms in distributed RL

The more experience an agent gets, the better it learns. In a physically coupled distributed system such as a modular robot, the learning modules positioned on the perimeter of the robot have the most freedom of movement. They can therefore attempt more actions and gather more of the experience essential for learning. But modules that are "stuck" on the inside have no way of exploring their actions and the environment. They gather almost no experience and cannot learn a reasonable policy in the allotted time. They need a mechanism for sharing in other agents' experience.

I have studied tasks in which individual modules are all expected to learn a similar policy. Therefore they can share any and all information and experience they gather: observations, actions, rewards, current parameter values. There is a trade-off between exploration and information sharing, as well as a natural limit in the channel bandwidth. One way of sharing information is by agreement algorithms among modules.
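As a rough illustration of what an agreement algorithm does, the sketch below runs plain average consensus over a chain of modules. It is a generic textbook scheme, not necessarily the one used in this work; the function names, the five-module chain, the reward values and the step size are all assumptions made for the example.

```python
def agreement_step(values, neighbors, step):
    """One synchronous round: every module nudges its estimate toward its neighbors'."""
    return [
        v + step * sum(values[j] - v for j in neighbors[i])
        for i, v in enumerate(values)
    ]


def run_agreement(values, neighbors, rounds=200, step=0.3):
    """Repeat the local update.

    Keeping `step` below 1 / (largest number of neighbors) is a sufficient
    condition for convergence on a connected, undirected neighbor graph.
    """
    for _ in range(rounds):
        values = agreement_step(values, neighbors, step)
    return values


# Hypothetical chain of five modules; each one talks only to adjacent modules.
neighbors = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
# Assumed local reward estimates: only the two end modules observed any reward.
local_rewards = [1.0, 0.0, 0.0, 0.0, 4.0]

agreed = run_agreement(local_rewards, neighbors)
print(agreed)  # every entry approaches the mean of the local estimates
```

Because each exchange is symmetric, the sum of the estimates is preserved, so interior modules end up with the same group-level estimate as the perimeter modules using only neighbor-to-neighbor messages.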

Spatial geometric transformations that improve learning in SRMRs

The motion of lattice-based self-reconfiguring modular robots is subject to geometric constraints that we can take into account when designing learning algorithms for them. Experimentally, I have found that modules can learn to create a structure reaching to an arbitrary goal position in the robot's 2D workspace faster if they already have access to a good locomotion policy, provided that a simple transformation (rotation and/or symmetrical flipping about an axis) is applied to the policy, or, equivalently, to every observation and action. Which transformation to apply? This can be answered locally by every module if we assume that it can make local sensory measurements of an underlying function whose optimum is at the goal, and therefore establish the direction of the local gradient.
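The idea of reusing a locomotion policy under a rotation or flip can be sketched as a wrapper that maps each observation into the policy's frame and maps the chosen action back. Everything concrete here is an assumption for illustration: observations are sets of occupied neighbor offsets on a square lattice, actions are unit displacements, `east_policy` is a toy stand-in for a learned policy, and `choose_quarter_turns` is one simple way to pick a rotation from a local gradient estimate.

```python
def rotate_ccw(v, quarter_turns):
    """Rotate a lattice offset counterclockwise by a multiple of 90 degrees."""
    dx, dy = v
    for _ in range(quarter_turns % 4):
        dx, dy = -dy, dx
    return (dx, dy)


def flip_x(v):
    """Mirror a lattice offset about the vertical axis."""
    return (-v[0], v[1])


def transform_policy(policy, quarter_turns=0, mirror=False):
    """Wrap `policy` so it acts in a rotated and/or mirrored frame."""
    def to_world(v):   # policy frame -> world frame: flip, then rotate
        if mirror:
            v = flip_x(v)
        return rotate_ccw(v, quarter_turns)

    def to_policy(v):  # world frame -> policy frame: undo rotation, then flip
        v = rotate_ccw(v, -quarter_turns)
        return flip_x(v) if mirror else v

    def wrapped(observation):
        local_obs = frozenset(to_policy(o) for o in observation)
        return to_world(policy(local_obs))
    return wrapped


def choose_quarter_turns(gradient):
    """Pick the rotation that maps 'east' onto the dominant gradient direction."""
    gx, gy = gradient
    if abs(gx) >= abs(gy):
        return 0 if gx >= 0 else 2
    return 1 if gy > 0 else 3


def east_policy(observation):
    """Toy policy: step east if that cell is free, otherwise step north."""
    return (1, 0) if (1, 0) not in observation else (0, 1)


# A module sensing a mostly northward gradient reuses the eastward policy.
north_policy = transform_policy(east_policy, choose_quarter_turns((0.1, 2.0)))
print(north_policy(frozenset()))          # free cell ahead
print(north_policy(frozenset({(0, 1)})))  # northern cell occupied
```

Transforming observations and actions in this way is equivalent to transforming the policy itself, so no relearning is needed to change the direction of travel.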

Distributed learning in swarms

The same principles of distributed gradient ascent reinforcement learning can be applied to groups of robots that are connected through communication links rather than physically. The degrees of freedom in such a system increase markedly when compared to the SRMR case, and therefore this problem is harder.

Additionally, I am modeling groups of autonomous vehicles with continuous controllers and non-holonomic constraints, which result in extremely complex search spaces for the learning algorithms.
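For readers unfamiliar with gradient ascent policy search, the following minimal sketch shows the basic update for a single learner on a toy two-action problem. It is a generic REINFORCE-style rule, not the distributed algorithm described above; the reward function, learning rate, step count and seed are assumptions chosen only to keep the example small.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def learn(steps=500, learning_rate=0.5, seed=0):
    """Gradient ascent on expected reward for a one-parameter stochastic policy."""
    rng = random.Random(seed)
    theta = 0.0  # policy parameter: P(action = 1) = sigmoid(theta)
    for _ in range(steps):
        p = sigmoid(theta)
        action = 1 if rng.random() < p else 0
        reward = float(action)  # toy reward: action 1 pays 1, action 0 pays 0
        # For this policy, d/dtheta log pi(action) = action - p.
        theta += learning_rate * reward * (action - p)
    return theta


theta = learn()
print(sigmoid(theta))  # probability of choosing the rewarded action after learning
```

In a distributed setting each agent would hold its own parameters and run such an update from its own observations and reward estimates, which is where the extra degrees of freedom of a swarm enlarge the search space.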

distributed control with CPGs

Even control of a single entity such as a non-reconfiguring robot can be cast in distributed terms. The benefit of this approach, which takes inspiration from naturally distributed control in neural systems (like brains and spinal cords), is the kind of robustness and adaptability we observe in natural systems.

In 2003, together with Joerg Conradt of ETH, Zurich, I designed the modular non-reconfiguring snake robot Wormbot, which was controlled in an entirely distributed manner with Central Pattern Generators (CPGs) running oscillators in each module and coupled across modules.
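A minimal sketch of distributed CPG control follows, assuming one phase oscillator per module with nearest-neighbor coupling along a chain. This is a common textbook model and not necessarily the oscillator used in Wormbot; the function name, frequency, coupling gain, phase lag and integration step are all illustrative assumptions.

```python
import math


def simulate_cpg(n_modules=6, steps=4000, dt=0.005,
                 freq_hz=1.0, coupling=4.0, phase_lag=math.pi / 3):
    """Euler-integrate a chain of coupled phase oscillators, one per module.

    Each module sees only its immediate neighbors' phases and is pulled
    toward trailing the module in front of it by `phase_lag` radians.
    """
    omega = 2.0 * math.pi * freq_hz
    phases = [0.0] * n_modules
    for _ in range(steps):
        rates = []
        for i, theta in enumerate(phases):
            rate = omega
            for j in (i - 1, i + 1):
                if 0 <= j < n_modules:
                    # The term vanishes when theta_j - theta_i == -(j - i) * phase_lag.
                    rate += coupling * math.sin(phases[j] - theta + (j - i) * phase_lag)
            rates.append(rate)
        phases = [theta + dt * rate for theta, rate in zip(phases, rates)]
    return phases


phases = simulate_cpg()
# Each module could drive its joint with a sinusoid of its own phase.
joint_angles = [0.5 * math.sin(theta) for theta in phases]
print([round(a, 3) for a in joint_angles])
```

Once the phases lock, the constant lag between adjacent modules produces a traveling wave along the body, with no central controller involved.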

Currently, Ilana Marcus, an undergraduate student at Tufts University, and I are developing CPG-based controllers for non-serpentine robots with different limb and degree-of-freedom topologies.